Speed of face recognition in the brain vs LLM

How do brains process faces so fast and what are LLMs missing?

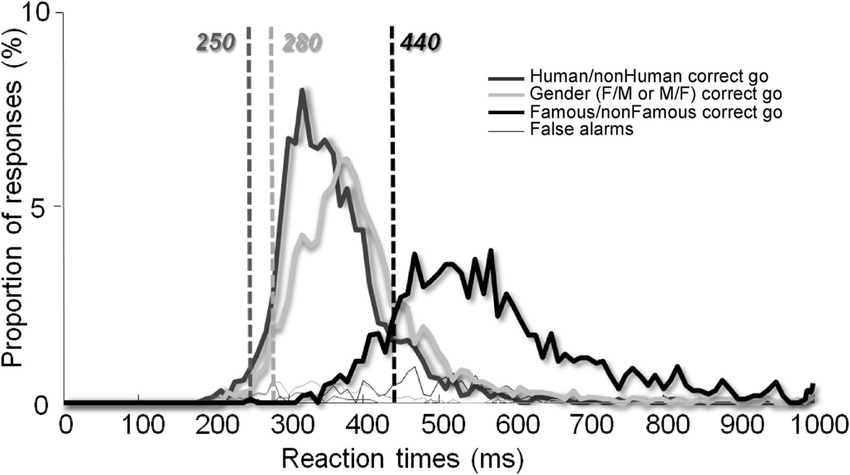

It takes 300-400ms from seeing a known face to lifting a finger. [1]

Simply reacting physically to a visual stimulus takes around 250ms for an average person.

That means the brain can handle the recognition part in as little as ~100ms!
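The subtraction behind that estimate can be spelled out. This is only a rough sketch using the two figures quoted above; the function and variable names are made up for illustration, and it assumes the stages simply add up, which is a simplification.

```python
# Figures quoted in the text above, in milliseconds.
KNOWN_FACE_RESPONSE_MS = (300, 400)  # seeing a known face -> lifting a finger
SIMPLE_REACTION_MS = 250             # reacting to any visual stimulus

def recognition_budget_ms(total_range, baseline):
    """Time left for recognition, assuming the stages are strictly additive."""
    low, high = total_range
    return (low - baseline, high - baseline)

low, high = recognition_budget_ms(KNOWN_FACE_RESPONSE_MS, SIMPLE_REACTION_MS)
midpoint = (low + high) / 2
print(f"recognition budget: {low}-{high} ms (midpoint {midpoint:.0f} ms)")
```

The midpoint of the resulting range is where the "~100ms" figure comes from.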

The 100-200ms slow-down for the known-face recognition task shows up in other research as well, e.g. when comparing it to the speed of just detecting a human vs. animal face [2, 3], or when measuring brain responses using EEG.

That leads us to the following two questions:

- How fast does information propagate through the brain?
- How quick is the basic processing of image input?

Let’s start with the first one:

Speed of neurons

I wanted to compare the number of sequential steps a brain can do in 100ms vs. a traditional ANN, but the more I read about neurons, the more complex it gets. So let’s take the high-level view:

Humans have chemical and electrical synapses:

- Chemical ones have a synaptic delay of 0.5-1ms.
- Electrical ones have a delay of 0.2ms or more.

Even though a neuron can fire about once per millisecond, most neurons reach at most a few hundred action potentials per second. That leaves room for only tens of sequential “steps” in 100ms, although each step can be massively parallel.

Therefore the processing cannot be too “deep” and must depend on very good feature extraction and/or parallelization.
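To make the "tens of steps" estimate concrete, here is a back-of-the-envelope sketch. It rests on two assumptions that are mine, not measurements: "low hundreds" of action potentials per second is read as 100-300 Hz, and each sequential step costs either one synaptic delay (optimistic bound) or one inter-spike interval (conservative bound). Conduction and integration time are ignored.

```python
WINDOW_MS = 100.0  # the recognition budget discussed above

def steps_from_delay(window_ms, delay_ms):
    """Upper bound: every step costs only one synaptic delay."""
    return window_ms / delay_ms

def steps_from_rate(window_ms, rate_hz):
    """Conservative bound: every step waits for one spike at the given rate."""
    return window_ms * rate_hz / 1000.0

# Chemical synapse delays from the text: 0.5-1 ms.
delay_bound = (steps_from_delay(WINDOW_MS, 1.0), steps_from_delay(WINDOW_MS, 0.5))
# Assumed firing rates for "low hundreds per second": 100-300 Hz.
rate_bound = (steps_from_rate(WINDOW_MS, 100), steps_from_rate(WINDOW_MS, 300))

print(f"synaptic-delay bound: {delay_bound[0]:.0f}-{delay_bound[1]:.0f} steps")
print(f"firing-rate bound:    {rate_bound[0]:.0f}-{rate_bound[1]:.0f} steps")
```

The firing-rate bound is the one that matches "tens of steps"; the synaptic-delay bound is a ceiling that real circuits are unlikely to reach.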

How quick is the basic visual processing?

Humans are able to recognize basic concepts like “picnic” or “smiling couple” in images seen for only 13ms. 13ms is super short: a 60fps video shows each frame for about 16.7ms, so 13ms is less than a single frame.
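The frame comparison is simple arithmetic; a small sketch, where the names are illustrative only:

```python
EXPOSURE_MS = 13.0  # image exposure time quoted above

def frame_duration_ms(fps):
    """How long a single frame stays on screen at a given frame rate."""
    return 1000.0 / fps

frame_ms = frame_duration_ms(60)
fraction_of_frame = EXPOSURE_MS / frame_ms
# Frame rate at which one frame would last exactly 13 ms.
matching_fps = 1000.0 / EXPOSURE_MS

print(f"one 60fps frame lasts {frame_ms:.1f} ms")
print(f"13 ms is {fraction_of_frame:.0%} of that frame")
print(f"a 13 ms frame corresponds to about {matching_fps:.0f} fps")
```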

Reasoning about these concepts takes much longer, e.g. answering “Is this a known face?” takes 100+ms.

This points to an architecture that has 2 distinct steps:

- Parse the visual input into basic features
- Process the features with higher level reasoning

Generating the features is so fast relative to the slow speed of individual neurons that it must be massively parallelized and is probably completely automatic.
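The two-step shape can be illustrated with a toy sketch. It is in no way a model of the visual cortex: the detectors, the stored "faces" and the threshold are all hypothetical, and Python threads only stand in for the idea that stage 1 work is independent and can run side by side.

```python
from concurrent.futures import ThreadPoolExecutor

def mean_brightness(pixels):
    return sum(pixels) / len(pixels)

def contrast(pixels):
    return max(pixels) - min(pixels)

def left_right_balance(pixels):
    half = len(pixels) // 2
    return sum(pixels[:half]) - sum(pixels[half:])

# Hypothetical detectors: none depends on another, so all can run at once.
DETECTORS = {
    "mean_brightness": mean_brightness,
    "contrast": contrast,
    "left_right_balance": left_right_balance,
}

def extract_features(pixels):
    """Stage 1: submit every detector at once, then collect the results."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, pixels) for name, fn in DETECTORS.items()}
        return {name: future.result() for name, future in futures.items()}

def recognize(features, known_faces, threshold=1.0):
    """Stage 2: compare against remembered faces one after another."""
    for name, stored in known_faces.items():
        distance = sum(abs(features[key] - stored[key]) for key in features)
        if distance <= threshold:
            return name
    return None

image = [0, 2, 4, 6]
features = extract_features(image)
known_faces = {
    "alice": dict(features),                          # identical features
    "bob": {k: v + 10 for k, v in features.items()},  # clearly different
}
print(recognize(features, known_faces))
```

The cost of stage 1 stays roughly flat as detectors are added (given enough parallel hardware), while stage 2 grows with the number of comparisons, which mirrors the fast-parsing, slower-reasoning split described above.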

This paper (and the image below) shows that distinguishing between a human and an animal face can take around 250–300ms, just above the average reaction time of 250ms. This suggests that early visual processing happens extremely fast, adding only 0–50ms on top of a simple reaction, while recognizing identity or familiarity adds another 100–200ms. Interestingly, if the face is highly familiar or the task is practiced, the additional recognition time can be much shorter, which we’ll touch on in the next section.

200ms difference between detecting a face and recognizing it. (source)

Brains vs LLMs

Brains seem to have a highly optimized parallel processing of visual stimuli that is followed by a slower “reasoning” phase. This is somewhat similar to today’s LLMs with many self-attention blocks and long reasoning chains.

It’s worth noting that we can train the brain to get faster at the “reasoning” phase: e.g. recognizing a familiar face is 80ms faster than a non-familiar one.

This form of plasticity and inference-pathway optimization is largely missing in currently used ANN architectures. However, these are all active areas of research:

- Continual learning tries to solve the trade-off between learning plasticity and memory stability.
  - Commonly used fine-tuning methods like LoRA still struggle with forgetting.
- Inference-pathway optimization is possible by e.g. using a Heterogeneous Mixture-of-Experts, i.e. experts of different sizes adapting to common but simpler tasks in the training data.
- Orthogonal to architectural changes are test-time compute optimizations.

Generally speaking, we are much more successful with such optimizations than with solving catastrophic forgetting, which is one of the big open questions today.
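To make the heterogeneous Mixture-of-Experts idea mentioned above a bit more tangible, here is a minimal sketch of top-1 routing over experts of different sizes. It is not the method of any particular paper: the sizes, the router and the cost measure are assumptions, and all weights are random, so it shows only the mechanics. In a real system the router would be trained, and an extra training objective would be needed for simple inputs to actually end up on the small experts.

```python
import math
import random

random.seed(0)
D_MODEL = 4  # toy input/output width (assumption)

def make_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

class Expert:
    """Two-layer MLP; the hidden width determines how expensive it is."""

    def __init__(self, hidden):
        self.w_in = make_matrix(hidden, D_MODEL)
        self.w_out = make_matrix(D_MODEL, hidden)
        self.cost = 2 * hidden * D_MODEL  # multiply-adds per token

    def __call__(self, x):
        hidden = [max(0.0, h) for h in matvec(self.w_in, x)]  # ReLU
        return matvec(self.w_out, hidden)

# Experts of different sizes: this is the "heterogeneous" part.
experts = [Expert(hidden=2), Expert(hidden=8), Expert(hidden=32)]
router = make_matrix(len(experts), D_MODEL)  # one score row per expert

def route(x):
    """Top-1 routing: only the highest-scoring expert processes the token."""
    probs = softmax(matvec(router, x))
    idx = max(range(len(experts)), key=lambda i: probs[i])
    output = [probs[idx] * y for y in experts[idx](x)]
    return idx, output, experts[idx].cost

token = [0.5, -1.0, 0.25, 2.0]
idx, output, cost = route(token)
print(f"expert {idx} handled the token at {cost} multiply-adds")
```

The point of the design is that a token routed to the smallest expert costs 16 multiply-adds here instead of 256 for the largest one, which is the sense in which common but simple inputs could take a cheaper inference pathway.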